Ethical Considerations & Bias Detection in Financial AI

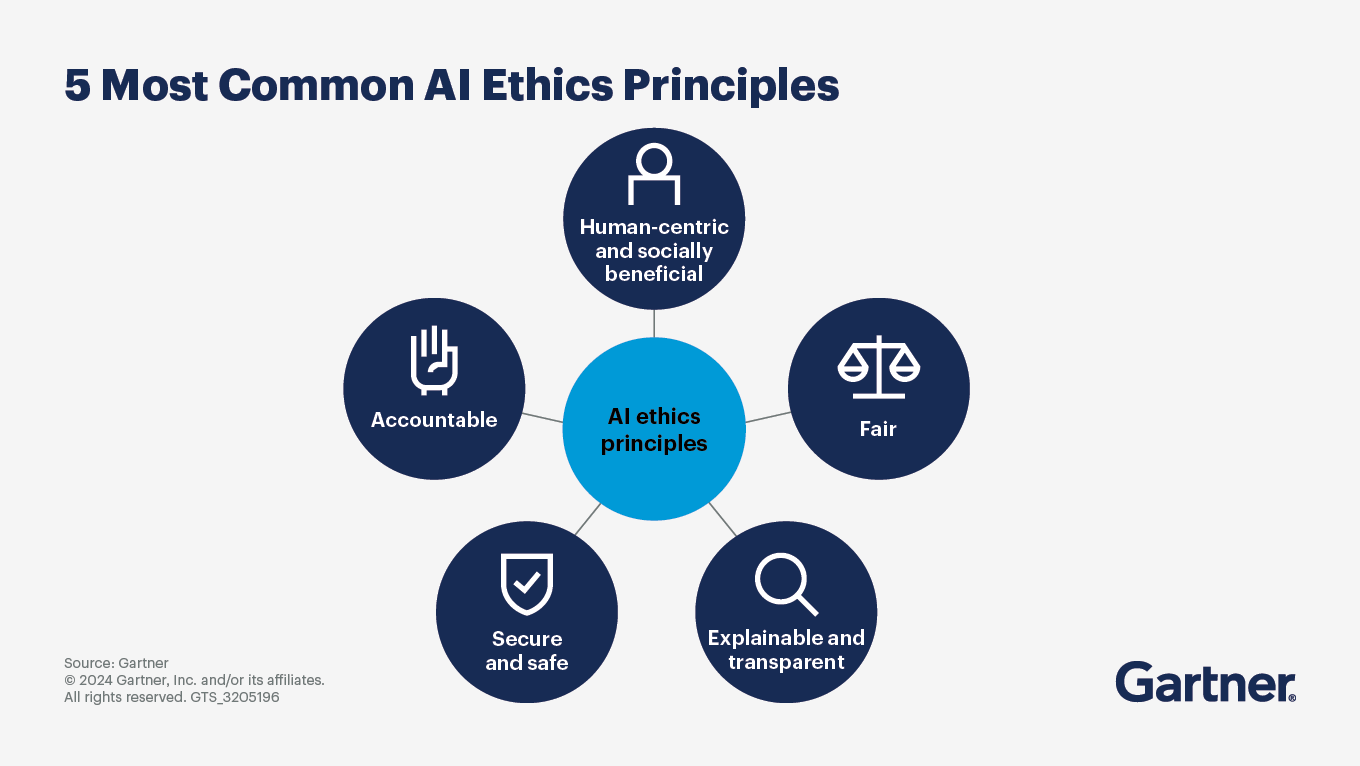

Ethics in AI is often overlooked, yet it is a necessity in the growing field of AI. Financial AI systems can inadvertently perpetuate biases present in historical data, leading to unfair outcomes and decisions. This stems from the fact that the data used to train AI is generally chosen by humans and is therefore subject to their biases (take, for example, left-hand and right-hand AI models). For example, a loan approval algorithm may unfairly reject applications from certain demographics if its training data reflects discriminatory practices. To remedy this, AI developers have a responsibility to implement bias detection systems, such as fairness metrics and adversarial training. Specifically, fairness metrics should be used to identify and quantify disparities in the model's outcomes, so that real biases in the system can be pinpointed. Then, to confirm the system is viable for use, adversarial training should be used to test the model against intentionally biased data and improve its robustness. Furthermore, transparency is required both to prevent bias and to support widespread adoption: stakeholders must be able to see directly how AI models take in data and make decisions. Ethical AI in finance requires a balance between innovation and social responsibility, ensuring that algorithms serve all customers not only efficiently but also fairly and equitably. Financial institutions must also establish ethical guidelines and governance frameworks to oversee the development and use of their specific AI systems. Taken together, these ethical considerations allow financial institutions to build trust with their customers and create a more inclusive financial ecosystem.
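To make the idea of a fairness metric concrete, the following is a minimal sketch of how disparities in loan approvals might be quantified. It is an illustration under stated assumptions, not a prescribed method: the function names, the sample decisions, and the group labels `"A"` and `"B"` are all hypothetical, and the two metrics shown (demographic parity difference and disparate impact ratio) are just two of several commonly used fairness measures.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Return the share of approved applications for each group.

    decisions: iterable of 0/1 values (1 = approved), hypothetical model output.
    groups: iterable of group labels aligned with decisions.
    """
    approved = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        total[group] += 1
        approved[group] += int(decision)
    return {group: approved[group] / total[group] for group in total}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group approval rates (0 = parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1 = parity)."""
    highest = max(rates.values())
    # Assumption: if no group has any approvals, report parity.
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical sample: ten loan decisions across two illustrative groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

rates = approval_rates(decisions, groups)
print("Approval rates:", rates)
print("Parity difference:", round(demographic_parity_difference(rates), 3))
print("Disparate impact ratio:", round(disparate_impact_ratio(rates), 3))
```

A large parity difference or a low impact ratio does not prove discrimination on its own, but it flags where a model's outcomes diverge across groups and where closer review is warranted. Any threshold for what counts as "too large" is a policy choice that an institution's governance framework would need to set.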

Source: Gartner